Things my husband didn't know:

-

I wrote her this long (probably too passionate) note about how it would "sand away" her voice. What's the point of making your writing sound exactly like everyone else's?

My mom is a mathematician and not really into writing. So, I can understand the appeal. "It just makes the sentences sound like they're supposed to"

It makes the sentences sound boring. And the topic is already boring enough.

Anyway, she's come around to writing, I think. And I'm offering to help edit. 2/2

Because then I have a best friend who keeps saying things like "chatGPT said that he didn't think ..."

There is no "he"

There is no "think"

There probably isn't even a "said"

I feel like I'm fighting a multi-front war.

This new tech has not been explained to "the general public" well. This reminds me of the early days of the internet when everyone was so scared of it... only now they aren't scared enough... in the right ways if that makes sense.

-

@futurebird Has OpenAI made a profit?

That is a different question. They have not made a profit if you count the mounting debt.

And a non-profit is always allowed to make one; it just isn't supposed to be the goal.

-

OK but how do they make the IT departments in various companies and agencies and schools all start nagging everyone in the same way?

Do they give them nagging lessons and call it "training" or something?

They start by making the bonuses of MS sales droids entirely dependent on their AI upsells. Get someone to increase their number of M365 seats by 20%? No bonus. Get them to move to a Copilot++ subscription? Big bonus. Need to almost 100% discount the product to shift it? No problem, lock people in now and exploit them later.

To get their bonuses, the sales droids need a narrative that makes people move to the AI thing. All of your competitors are buying it and seeing a 10% reduction in costs! They're shipping twice as fast! Oh, sorry, I can't give you details, they're covered by NDA (and you wouldn't want me to give your competitors details about you, would you?) but trust me bro, they're all seeing huge productivity wins.

So now some decision maker has been persuaded to buy this nonsense. Now their reputation is on the line. If it improves things, yay! They're a visionary! They led the AI transition at the organisation! Leadership! But what if it doesn't work? Not possible, they're a leader. If you're not seeing a productivity boost, it can't possibly be because some snake-oil salesdroid sold them a lemon; it must be because you are using it wrong.

And, helpfully, Microsoft added dashboard things so you can see who is using Copilot and how much. Not seeing a big productivity win? Just go to the dashboard and see who isn't using it. It must be their fault. Pressure them to use it more. You'll see big wins! And you must see them, because otherwise you have to admit that you were scammed.

-

That's backwards. The software is supposed to be so useful you can't keep people from using it. How did the cracking of the whip get into the cycle?

We are all lazy. If it worked people would be all over it.

-

@futurebird "and from the cave of oracles"

People _love_ having an oracle, a divine voice. It frees them from responsibility and produces something that possesses both intimacy and authority.

Our eusociality is mediated by our constructions of authority; the AI movement as we have it is a push to own the construction of authority and thus society, and it's entirely on purpose.

Assuming there are survivors, in a hundred years there either won't be facts or there will be some new authority.

-

@graydon

I've been in the room when people decided to drop tens of millions on absolute 'brick' McKinsey consultants, just to get a voice of 'authority' in the room.

That's when I learned that even highly domain-specific VPs are desperate for the deniability that comes with consulting a third party or Key Opinion Leader (KOL).

I think @graydon hit it on the nose with the urge to ask the oracle about every little thing.

@graydon

I was hoping that by this point non-technical users would have hit the reliability wall with this tech and realized that it isn't as accurate as their smartest friend, but maybe that's not true.

I have a theory that we haven't seen the vendors attach an Alexa voice to these systems, because hearing a mistruth hits people differently than reading one.

Although it's funny to tally which way the pronoun splits, and for whom. Almost everyone in tech uses "it" or, rarely, "she"

@futurebird

-

@futurebird @dnavinci

my experience is that most users aren't reading past the "you're absolutely right!" before they share their oracle's entrails. quite often the actual pronouncement is more nuanced and subtle, but they don't get beyond the initial praise and therefore learn nothing.

assumption is that this is by design...

-

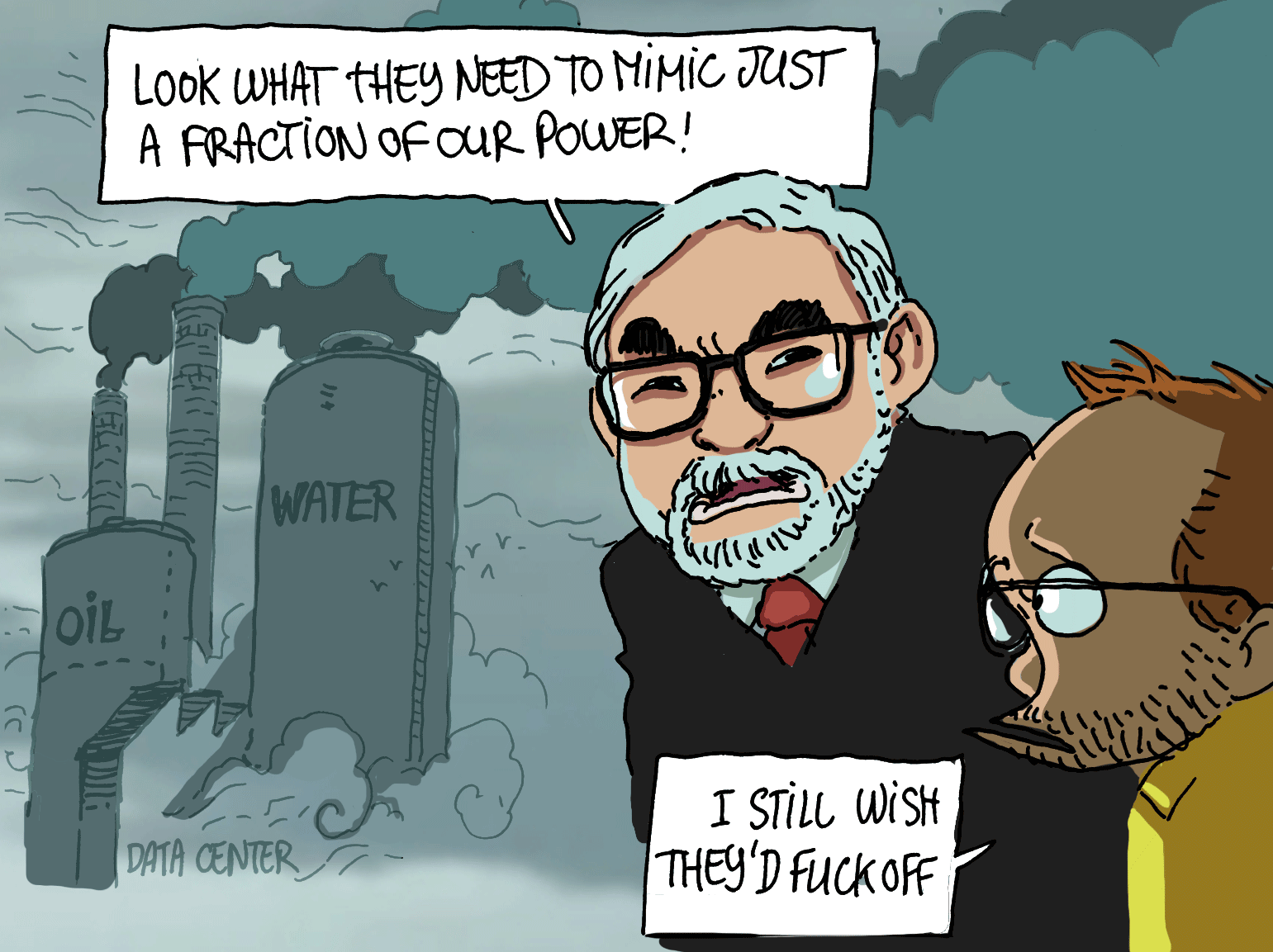

@futurebird Studio Ghibli Controversy?

Same question here.

Maybe @futurebird referred to the "Ghibli style" that ChatGPT offered, drawing the ire of Miyazaki himself?

@bouletcorp2 made a masterful cartoon about that:

-

@jon_ellis

They significantly turned this praise down when they initially released gpt5. I had front row seats to a lot of people's fawning chatbot waifus suddenly turning into significantly more scolding (but correct) shrews.

Sometimes I like to warm my dark little heart by reading all their wounded, disappointed reviews from back in August.

So yes, I agree that this was definitely by design.

@graydon @futurebird

-

@dnavinci @graydon @futurebird Anyone using "she" for an LLM immediately raises a huge red flag for me.

I had a colleague I was working on a proposal with insist forcefully that all the robots in his lab were "she". It was weird and a little creepy, and so I asked about it.

(Another colleague, a woman, had robots that were all "she" because they were named after female science fiction writers. Themed names are common.)

Instead of some rational, theme-related reason, he said "Because with the amount of time I spend in the lab, they'd better be!"

I pointed out that that was gross and offensive. He asked why, and I explained that he was implying that women are things and that it's not okay for him to emphasize their gender to me, a woman, since it is effectively saying that in his mind I rank at the same level as a robot in his lab.

He couldn't get over being called out and refused to work on the proposal with me.

Ick.

-

@robotistry @dnavinci @graydon

I find "he" just as bad. Or even "them" if they talk about it like it's a person. But I can see how that makes it ... worse.

-

@graydon @futurebird

They turned the praise down, but not enough. I still find it ... uncomfortable because it's constantly outputting phrases that do not convey information, yet if they came from a human they would mean a great deal to me. Such as "Let's get started working on your idea."

Such a nice thing to hear from another person, it means they want to help and think it's worth their time.

Reading it from a machine makes me sad.

-

@futurebird I've been warning people about how LLMs are designed to reinforce the dominant culture and I've been getting a lot of blank looks.

Homogenizing voices to the dominant culture is the point IMHO.

-

@futurebird Just spoke to a friend yesterday who I have been out of touch with. He is 77, a widower, and a trad published author. He is very snooty (his words) about what he reads and writes. Literary fiction is his jam. So I was shocked to hear he was utilizing ChatGPT. Not to generate things (he said) but for grammar, research, and conversation. He said he was lonely and the LLM was "like talking to another person with my same interests." He had learned things. I tried to caution him...

1/2

-

@futurebird ...but he wasn't having it. Insisted that he KNEW it was a machine, that he had set parameters so it didn't refer to itself as "I" or "me" because it wasn't a person, but he still talked about it like it WAS a person. Now I'm worried about him. He spends a lot of time alone, writing, and now with this constant "companion." And the irony is that, because he eschews scifi where these questions have been raised, he has no suspicions about AI and is resistant to hearing any.

2/2

-

I have a friend like this too. So, I'm going to just... show up more in their life.

-

@futurebird this is non-fiction?

yes

-

@Nichelle @futurebird It appears to me that LLMs make grammatical mistakes relatively often. They weren't trained on a corpus of well-edited text with perfect academic grammar. They were trained to imitate the public internet.

They're good at imitating human text, but, just like most humans posting in English, they don't actually appreciate many of the finer points of English grammar.

When writers say they use these things for help with grammar, I get secondhand embarrassment.