This story about ChatGPT causing people to have harmful delusions has mind-blowing anecdotes.

-

I had a friend who thought ChatGPT was "very helpful with criticism of poetry" ... and that's true in the sense that it will look at phrases in your poem and Frankenstein together some responses with the general form and tone of what real people have said about real poems with similar patterns.

That might give someone some ideas for revisions along stereotypical lines.

It can't scan rhythm. It could easily miss the whole point and lead you to make derivative revisions.

@futurebird @grammargirl poetry, for me, is so much tied to emotional response. A group of words, even a single word, brings to mind a whole gamut of feelings, thoughts and perceptions. All of which AI cannot have. It can only parrot the emotions others have told it of.

It is similar to the effect a song has. If I hear the first few notes of a certain song, it engenders memories of a lovely little cat I had, who was taken away far too soon. Ten seconds = tears. What can AI analyze from that?

-

@futurebird @grammargirl Like asking your mom. Which everyone tells you never to do.

@Wyatt_H_Knott @futurebird @grammargirl I implore you wonderful people to try local LLMs with an open mind. Like most powerful tools, you get out of it what you put in and it can surely be misused.

Once it's running on an aging, modest computer right next to you, it's hard to not notice the staggering implications for our relationship with society's existing power structures. I have a personal tutor right out of Star Trek that nobody can take away from me or use to spy on me. Apart from all the cool automation and human-machine interaction it opens up, for an increasing number of tasks I can completely stop using search engines.

I think the "AI is just NFTs again but more evil; it just means the inevitable acceleration of billionaire takeover and ecosystem destruction; everyone who uses it is a gullible rube at best and an enemy at worst" clickbait ecosystem is misleading and unhealthy. It fuels conspiratorial thinking, incurious stigma, and a lot of unnecessary infighting.

-

@Wifiwits @futurebird @Wyatt_H_Knott @grammargirl I'm trying to have a real conversation about an important subject, can you please be a child elsewhere?

-

@necedema @Wyatt_H_Knott @grammargirl

Do “local LLMs” use training data compiled locally only? Or do you have a copy (a snapshot) of the associative matrices used by cloud-based LLMs stored locally so you can run LLM prompts without an internet connection?

-

@futurebird @necedema @Wyatt_H_Knott @grammargirl If you have the memory & compute power on your local machine to actually run it, you can download the whole thing and run it locally, completely disconnected. It's conceivable that you could use only local training data, but good luck gathering enough local data. Also, training it would be unbelievably time-consuming if you don't use the cloud and you want anything robust. What's usually done is you fine-tune a pre-trained model on your own data, and then feed it local data and system prompts to make the responses appropriate to your use case.

-

@hosford42 @necedema @Wyatt_H_Knott @grammargirl

So, almost no one is using this tool in this way.

Very few are running these things locally. Fewer still are creating their own (attributed, responsibly obtained) data sources. What that tells me is that this isn’t about the technology that allows this kind of recomposition of data; it’s about using (exploiting) the vast sea of information online in a novel way.

-

@necedema @Wyatt_H_Knott @futurebird @grammargirl Have you validated your alleged tutor against a topic in which you are well versed?

My experience has been that, for topics in which I am an expert, I immediately spot many errors in the generated output.

Misunderstood concepts, mistakenly explained, but with utter confidence and self-assured tone - absolutely no doubt or uncertainty.

So, if for topics in which I'm versed I can see it's not reliable, how could I rely on it for anything else?

-

@necedema @Wyatt_H_Knott @futurebird @grammargirl Clearly, I'm not the only one that has experienced this.

And trust me, I've tried them. I downloaded and ran Llama 1 as soon as it got leaked, and have consistently messed with various kinds of local LLMs.

I stopped using them because they didn't offer me anything of value.

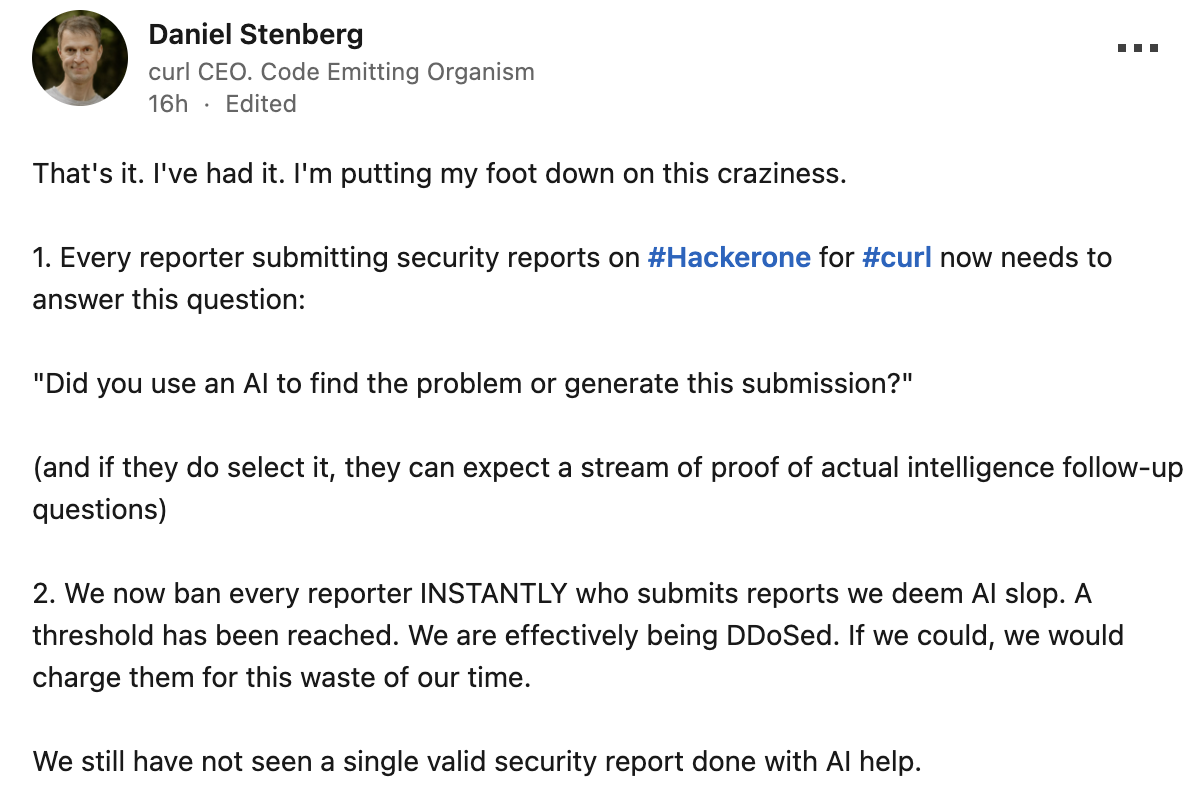

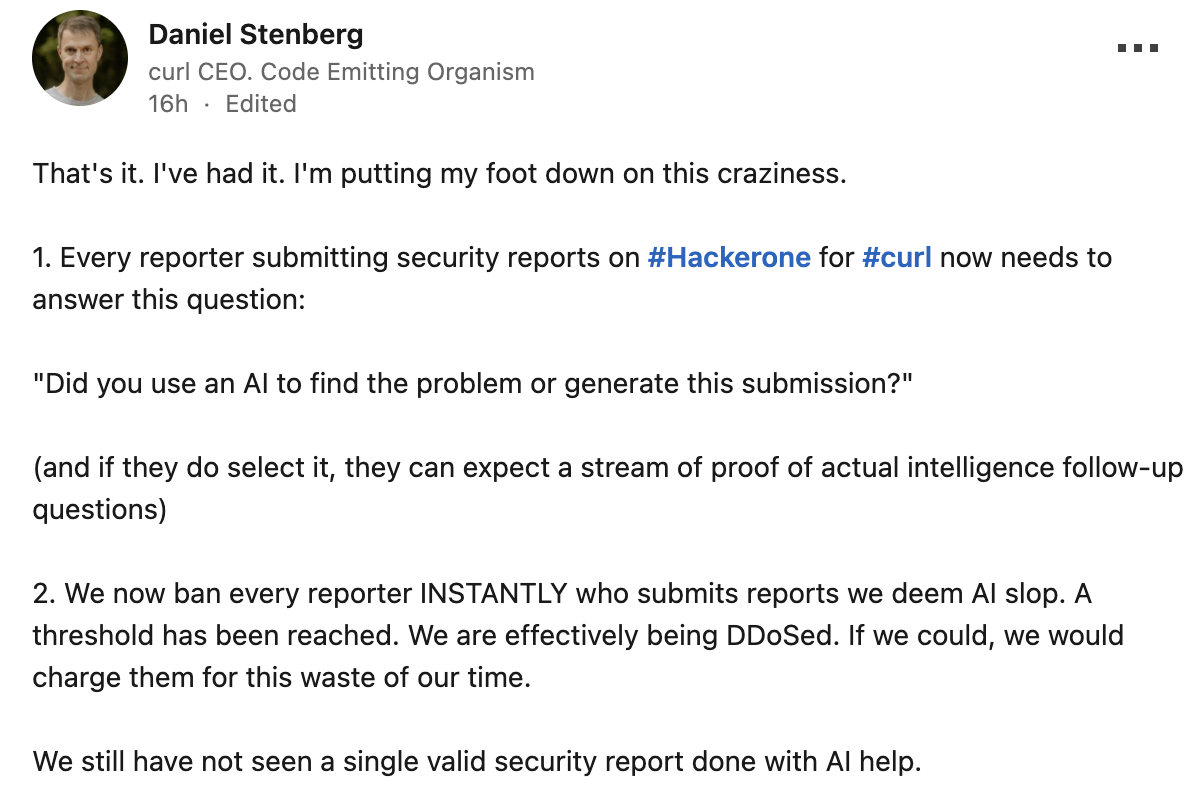

Lukasz Olejnik (@LukaszOlejnik@mastodon.social)

AI vulnerability/bug founds and reports is a huge problem. Curl has banned the use of AI-generated submissions via HackerOne because none of it made any sense, and is a waste of resources and time. "We are effectively being DDoSed. If we could, we would charge them for this waste of our time" https://hackerone.com/reports/3125832

-

@hosford42 @futurebird @necedema @Wyatt_H_Knott @grammargirl how can you have all that training data stored locally? if stored locally u certainly can't use it as a search engine for the wealth of material on the www.

i don't understand

-

@hosford42 @necedema @Wyatt_H_Knott @grammargirl

It’s like the sophomoric “hack” for keeping up with all the damned five paragraph essays. Just search on the internet for a few documents with good-sounding paragraphs. Copy and paste chunks of sentences into a word document. Then carefully read and reword it all so it’s “not plagiarism”

This is still plagiarism.

GPT will cheerfully help with that last step now.

-

@hosford42 @necedema @Wyatt_H_Knott @grammargirl

And yet none of the English and History teachers I know are very worried about this. Because the results of this process are always C-student work. The essay has no momentum, no cohesive idea justifying its existence beyond “I needed to turn some words in”

It’s swill.

-

@necedema @futurebird @Wyatt_H_Knott @grammargirl as I read here recently, an organic, fair trade, co-operative owned, open source oil rig… is still an oil rig.

-

@mzedp @necedema @Wyatt_H_Knott @futurebird @grammargirl > Have you validated your alleged tutor against a topic in which you are well versed?

ChatGPT and related programs get things wrong in areas I know well, over and over again.

They've told me about stars and planets that don't exist, how to change the engine oil in an electric car, how water won't freeze at 2 degrees above absolute zero because it's a vapor at that temperature, that a person born in the 700s was a major "7th century" figure ...

over and over again, like that.

But worst of all? All delivered with a confidence that makes it very hard for people *who don't know the topic* to tell that there is a problem at all.

-

@mzedp @necedema @Wyatt_H_Knott @futurebird @grammargirl Oh, and while I'm on this topic, here's your periodic reminder of something else important:

People in my part of the world (the USA), and very likely those in similar or related cultures, have a flawed mental heuristic for quickly judging if someone or something is "intelligent": "do they create grammatically fluent language, on a wide range of topics, quickly and on-demand"?

This heuristic is faulty -- it is very often badly wrong. Not going to have a long, drawn-out debate about this: it's faulty. The research is out there and not hard to find, if you really need it.

In the case of LLMs, this heuristic leads people to see intelligence where there isn't any. That's bad enough. But it also leads people to *fail*, or even *refuse*, to acknowledge intelligence where it does exist -- specifically, among people who don't talk or write very articulately.

In the specific case of the USA, this same heuristic is proving to be very dangerous indeed, with the federal government wanting to create official registries of autistic people, for example. The focus is overwhelmingly directed towards autistic people who can't or don't speak routinely, and it's *appalling*.

-

@mzedp @necedema @Wyatt_H_Knott @futurebird @grammargirl Representative example, which I did *today*, so the “you’re using old tech!” excuse doesn’t hold up.

I asked ChatGPT.com to calculate the mass of one curie (i.e., the amount producing a specific number of radioactive decays per second) of the commonly used radioactive isotope cobalt-60.

It produced some nicely formatted calculations that, in the end, appear to be correct. ChatGPT came up with 0.884 mg, the same as Wikipedia’s 884 micrograms on its page for the curie unit.
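For anyone who wants to check the 0.884 mg figure by hand, here is a minimal sketch of the arithmetic. The half-life (5.2714 years) and molar mass (59.9338 g/mol) of cobalt-60 are standard reference values I'm assuming here, not numbers quoted in the thread, and the function name is just illustrative.

```python
import math

AVOGADRO = 6.02214076e23           # atoms per mole (exact SI value)
CURIE_IN_BQ = 3.7e10               # decays per second in one curie (by definition)
SECONDS_PER_YEAR = 365.25 * 86400  # Julian year

def mass_for_activity_g(activity_bq, half_life_s, molar_mass_g_per_mol):
    """Mass (grams) of a pure isotope sample with the given activity."""
    decay_constant = math.log(2) / half_life_s  # lambda, per second
    atoms = activity_bq / decay_constant        # from A = lambda * N
    return atoms / AVOGADRO * molar_mass_g_per_mol

# Assumed reference values for cobalt-60 (not taken from the thread).
CO60_HALF_LIFE_S = 5.2714 * SECONDS_PER_YEAR
CO60_MOLAR_MASS = 59.9338  # g/mol

co60_mass_mg = mass_for_activity_g(CURIE_IN_BQ, CO60_HALF_LIFE_S, CO60_MOLAR_MASS) * 1000
print(f"{co60_mass_mg:.3f} mg")  # roughly 0.884 mg
```

The whole calculation rests on the relation A = λN with λ = ln 2 / t½, so a wrong or invented half-life silently produces a wrong (but plausible-looking) mass.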

It offered to do the same thing for another isotope.

I chose cobalt-14.

This doesn’t exist. And not because it’s really unstable and decays fast. It literally can’t exist. The atomic number of cobalt is 27, so all its isotopes, stable or otherwise, must have a mass number of at least 27. Anything with a mass number of 14 *is not cobalt*.
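The sanity check being described is simple enough to sketch in a few lines. This is only an illustration: the lookup table and function name are hypothetical, and the test is a necessary condition (mass number = protons + neutrons, so it can never be below the atomic number), not proof that an isotope has actually been observed.

```python
# Atomic numbers for the two elements in the mixup (illustrative subset only).
ATOMIC_NUMBERS = {"C": 6, "Co": 27}

def mass_number_is_possible(symbol, mass_number):
    """Return False when the mass number is below the element's proton count.

    Mass number = protons + neutrons, so it cannot be smaller than the
    atomic number. Passing this check does NOT mean the isotope exists.
    """
    return mass_number >= ATOMIC_NUMBERS[symbol]

for symbol, mass_number in [("C", 14), ("Co", 60), ("Co", 14)]:
    verdict = "plausible" if mass_number_is_possible(symbol, mass_number) else "impossible"
    print(f"{symbol}-{mass_number}: {verdict}")
```

A check like this is the sort of guard a tool could run before inventing a half-life for "cobalt-14".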

I was mimicking a possible Gen Chem mixup: a student who confused carbon-14 (a well known and scientifically important isotope) with cobalt-whatever. The sort of mistake people see (and make!) at that level all the time. Symbol C vs. Co. Very typical Gen Chem sort of confusion.

A chemistry teacher at any level would catch this, and explain what happened. Wikipedia doesn’t show cobalt-14 in its list of cobalt isotopes (it only lists ones that actually exist), so going there would also reveal the mistake.

ChatGPT? It just makes shit up. It invents a half-life (for an isotope that, just to remind you, *cannot exist*) and carries on like nothing strange has happened.

This is, quite literally, one of the worst possible responses to a request like this, and yet I see responses like this *all the freaking time*.

-

@hosford42 @necedema @Wyatt_H_Knott @grammargirl

There's a whole movement of running them locally but it's niche, though the smaller versions are getting better.

But nobody is creating them locally. Fine-tuning with your own data, yes, but that's a little sauce over existing training data.

-

@dpnash Yes, I agree. GenAI can never give answers: it can only suggest questions to ask or things to investigate.

That can be useful, but it requires awareness and technical knowledge to understand the distinction, which is why I don't think genAI was ready for a broad public release.

But would I be OK with a doctor using genAI on an anonymised version of my lifetime medical records to spot possible missed patterns? Yes, I think so.

-

@david_megginson @dpnash @mzedp @necedema @Wyatt_H_Knott @futurebird @grammargirl

A model like the one you're talking about would most likely be some form of https://wikipedia.org/wiki/Convolutional_neural_network , and would bear about as much resemblance to OpenAI's offerings as a giraffe does to a housecat.

-

@Orb2069 @david_megginson @mzedp @necedema @Wyatt_H_Knott@masto.host @futurebird @grammargirl My own take on valid uses of this stuff:

If by "AI" we mean something like "machine learning, just with a post-ChatGPT-marketing change", then the answer is "oh, absolutely, assuming the ML part has been done competently and is appropriate for this type of data." And there are plenty of uses in medicine I can imagine for *certain kinds* of ML.

If by "AI" we mean "generative AI", either in general or in specific (e.g., an LLM like ChatGPT), then the answer is "hell no, absolutely not, please don't even bother asking" for most things, including everything to do with medicine, however tangential.

The one single good general use case for "generative" AI is "make something that looks like data the AI has seen before, without regard for whether it reflects anything real or accurate." Disregarding known issues* with how generative AI is built and deployed nowadays, it's fine for things like brainstorming when you get stuck writing, or seeing what different bits of text (including code) might look like, in general, in another language (or computer programming language, in the case of code). But it's absolutely terrible for any process where factual content or accuracy matters (e.g., online search, or actually writing the code you plan to use), and I put all medical uses in that category.

* Plagiarism, software license violations, massive energy demands, etc. That's an extra layer of concern that I have quarrels with, but even without these, bad factual accuracy is a dealbreaker for me in almost all scenarios I actually envision for the stuff.