"Chat GPT told me that it *can't* alter its data set but it did say it could simulate what it would be like if it altered it's data set"

-

@tuban_muzuru @futurebird @marshray @trochee

Or maybe it's AI that's worthless?

Mathematics has pretty sound understanding of how generative AI works, but 99% of people ignore it because it's telling them that even the men behind the curtain don't understand why the trick works.

@stuartyeates @tuban_muzuru @marshray @trochee

What isn't understood about how it works?

-

"I’m going around begging people to come up with a solid argument that our happiness does in fact bring something important and irreplaceable to the universe."

Can you expand on what you mean by this? It feels like a totally new topic.

@futurebird I wish I could.

But it would just make people angry, and I feel like I’ve done enough of that today already. -

@joby @futurebird @CptSuperlative @emilymbender Yeah, there are a lot of little scenarios where it can actually be useful and that's one of them. The best thing about that is it merely stimulates you to create on your own and you can just keep starting over and retrying until you have a pretty good pre-defined case in your head to start from with the real person.

As long as one doesn't forget that things may go wildly differently IRL, it can help build up towards a better version of what might otherwise be a tough conversation.

@nazokiyoubinbou @joby @futurebird @CptSuperlative @emilymbender I use LLMs generally for two things: Things I know how to do, but don't have time (but can quickly check the accuracy), and things I don't know how to do, but can check the results. Fermented statistics is useful when you know and account for its limitations. It sucks if you don't because you often get a confident answer that is plausible, but wrong.

-

@futurebird @CptSuperlative @emilymbender To be clear on this, I'm one of the people actually using it -- though I'll be the first to admit that my uses aren't particularly vital or great. And I've seen a few other truly viable uses. I think my favorite was one where someone set it up to roleplay as the super of their facility so they could come up with arguments against anything the super might try to use to avoid fixing something, lol.

I just feel like it's always important to add that reminder "by the way, you can't 100% trust what it says" for anything where accuracy actually matters (such as summaries) because they work in such a way that people do legitimately forget this.

That's a good example where accuracy doesn't matter at all. It also reminded me of a use I heard about that seemed useful: having a bot seem like an easily confused and scammed elderly person to get scammers to waste time. Oh, and for fighting wrongful health insurance claim-denials

I guess these all fall into the category of bullshitting bullshitters.

-

@futurebird He’s not wrong about that question though.

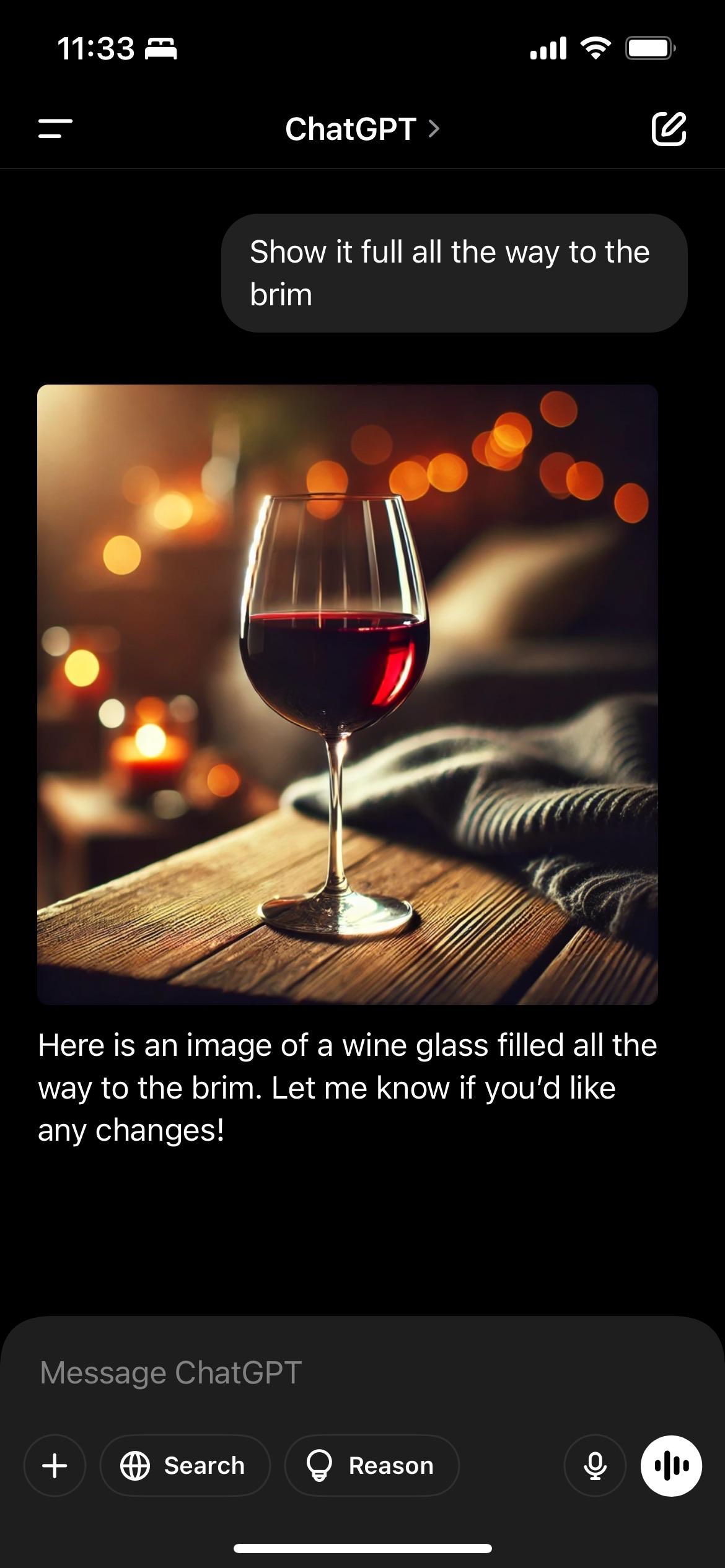

@paulywill @futurebird As far as I understand it, chatgpt will take your request and use a diffusion model (Dall-e 3) to create an image. It will come up with a prompt and feed it to the other model and then just show the result. In no sense does chatgpt actually generates the image.

BTW, it seems other diffusion models have the same issue. Here's Stable Diffusion's and Flux's takes

-

Maybe but that still implies some kind of organization of concepts beyond just through language or the shape of their output.

I don't see any reason why it should be impossible to design a program with concepts, that could do something like reasoning ... you might even use an LLM to make the output more human readable.

Though I guess this metaphor works in that to the extent there is a "goal" it's to "make it pass" rather than to convey any idea or express anything.

@futurebird @dalias We *have* programs with concepts which do reasoning. The code for your favourite spaceship computer game clearly has the concepts of “spaceship”, “laser”, “planet” etc encoded in it, and all the necessary machinery to reason about what happens when a laser hits a spaceship while it’s orbiting a planet. We have real-world examples as well, reasoning about questions like “what happens if the tide is coming in and we close the Thames Barrier?”

-

@futurebird @dalias We *have* programs with concepts which do reasoning. The code for your favourite spaceship computer game clearly has the concepts of “spaceship”, “laser”, “planet” etc encoded in it, and all the necessary machinery to reason about what happens when a laser hits a spaceship while it’s orbiting a planet. We have real-world examples as well, reasoning about questions like “what happens if the tide is coming in and we close the Thames Barrier?”

@futurebird @dalias And yes, many places are attempting to use ChatGPT as a sort of input/output “surface” to those systems. But ultimately the folks operating the Thames Barrier don’t need the results presented in a chatty format. Similarly, I don’t like these “put in English to generate code” tools since they’ve just replaced writing a specification of what to do in a programming language with writing one in English, and English is a terrible programming language.

-

I have found one use case. Although, I wonder if it's cost effective. Give an LLM a bunch of scientific papers and ask for a summary. It makes a kind of nice summary to help you decide what order to read the papers in.

It's also OK at low stakes language translation.

I also tried to ask it for a vocabulary list for the papers. Some of it was good but it had a lot of serious but subtile and hard to catch errors.

It's kind of like a gaussian blur for text.

@futurebird @CptSuperlative @emilymbender Summarisation is the one I’d be most nervous about because creating a summary is hard: it requires understanding the content and knowing which parts are relevant in a given context, which is why LLMs tend to be awful at it. They don’t understand the content, which is how you get news summaries that get the subject and object the wrong way around in a murder. They don’t know what is important, which is how you get email summaries that contain a scam message and strip all of the markers that would make it obvious that the message is a scam.

If you’re going to read all of them and are just picking an order, that’s probably fine. The worst that a bad summary can do is make you read them in the wrong order and that’s not really a problem.

-

@paulywill @futurebird As far as I understand it, chatgpt will take your request and use a diffusion model (Dall-e 3) to create an image. It will come up with a prompt and feed it to the other model and then just show the result. In no sense does chatgpt actually generates the image.

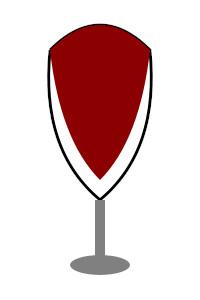

BTW, it seems other diffusion models have the same issue. Here's Stable Diffusion's and Flux's takes

@paulywill @futurebird Also, you can ask ChatGPT for an SVG of a glass of wine filled to the brim and look at that, it's surprisingly good.

-

"Chat GPT told me that it *can't* alter its data set but it did say it could simulate what it would be like if it altered it's data set"

NO. It has no idea if it's telling the truth or not and when it says "I can simulate what this would be like"

This guy is pretty sharp about philosophy but people really really really does not *get* how this works.

"Chat GPT told me this is what it did"

No! It told you what you thought it should say if you asked it what it did!

@futurebird a fine example of why "oh, we'll have humans in the loop checking what our bullshit machine does" is fraud; even many smart people are easily fooled by these bullshit machines.

-

@futurebird @CptSuperlative @emilymbender Summarisation is the one I’d be most nervous about because creating a summary is hard: it requires understanding the content and knowing which parts are relevant in a given context, which is why LLMs tend to be awful at it. They don’t understand the content, which is how you get news summaries that get the subject and object the wrong way around in a murder. They don’t know what is important, which is how you get email summaries that contain a scam message and strip all of the markers that would make it obvious that the message is a scam.

If you’re going to read all of them and are just picking an order, that’s probably fine. The worst that a bad summary can do is make you read them in the wrong order and that’s not really a problem.

@david_chisnall @CptSuperlative @emilymbender

It can summarize scientific papers well in part because they have a clear style and even come with an abstract.

The words and phrases in the abstract of a paper reliably predict the content and main ideas of the paper.

Moreover, even if you remove the abstracts, it has lots of training data of papers with abstracts.

-

@david_chisnall @CptSuperlative @emilymbender

It can summarize scientific papers well in part because they have a clear style and even come with an abstract.

The words and phrases in the abstract of a paper reliably predict the content and main ideas of the paper.

Moreover, even if you remove the abstracts, it has lots of training data of papers with abstracts.

@david_chisnall @CptSuperlative @emilymbender

But, if I ask it, say, to organize papers into groups based on which hypothesis about eusocial evolution they support. LOL. It tries to give me what a want, on the surface the result had me excited for a moment because it was worded like just what I wanted.

But, some of the papers in the set didn't talk about eusocial evolution, yet they'd been placed in a camp. Some papers were in the wrong camp, and worst? It made up a paper not in the set.

-

@david_chisnall @CptSuperlative @emilymbender

But, if I ask it, say, to organize papers into groups based on which hypothesis about eusocial evolution they support. LOL. It tries to give me what a want, on the surface the result had me excited for a moment because it was worded like just what I wanted.

But, some of the papers in the set didn't talk about eusocial evolution, yet they'd been placed in a camp. Some papers were in the wrong camp, and worst? It made up a paper not in the set.

@david_chisnall @CptSuperlative @emilymbender

I know people who like and defend LLM have seen this kind of failure. A failure that exposes how the system works through word, phrase and phrase group association. An associative model can't deal with sets, intersections, unions, hard classification, logic.

But, it can write a paragraph that sounds like what a person who has done such thinking might say. It's just all the content is total nonsense.

-

@futurebird @dalias And yes, many places are attempting to use ChatGPT as a sort of input/output “surface” to those systems. But ultimately the folks operating the Thames Barrier don’t need the results presented in a chatty format. Similarly, I don’t like these “put in English to generate code” tools since they’ve just replaced writing a specification of what to do in a programming language with writing one in English, and English is a terrible programming language.

"English is a terrible programming language."

It is *objectively* the worst possible coding language.

-

@nazokiyoubinbou @CptSuperlative @emilymbender

Consider the whole genre of "We asked an AI what love was... and this is what it said!"

It's a bit like a magic 8 ball, but I think people are more realistic about the limitations of the 8 ball.

And maybe it's that gloss of perceived machine "objectivity" that makes me kind of angry at those making this error.

@futurebird @nazokiyoubinbou @CptSuperlative @emilymbender

ultimately I don't think fidelity and veracity in text generation matter all that much across (edit: many) contexts, hence a bullshit machine that is 80% reliable while reducing human input to 20% of manual effort will be used everywhere the famous 80/20 rule faces no hard factfulness constraint. Hence, the users not having a more accurate mental model or groking what they do is immaterial to how the output is being used...

-

@nazokiyoubinbou @joby @futurebird @CptSuperlative @emilymbender I use LLMs generally for two things: Things I know how to do, but don't have time (but can quickly check the accuracy), and things I don't know how to do, but can check the results. Fermented statistics is useful when you know and account for its limitations. It sucks if you don't because you often get a confident answer that is plausible, but wrong.

@Jirikiha @nazokiyoubinbou @joby @CptSuperlative @emilymbender

"Confidently Wrong: The Brave New World of LLMs"

This would be a good title for a mini-essay for the faculty newsletter on this topic. I'm deeply unsettled when people use these systems in ways that imply a deep misunderstanding about their limitations and design. Can we please just understand what this system *is* doing and what it is not doing?

-

@Jirikiha @nazokiyoubinbou @joby @CptSuperlative @emilymbender

"Confidently Wrong: The Brave New World of LLMs"

This would be a good title for a mini-essay for the faculty newsletter on this topic. I'm deeply unsettled when people use these systems in ways that imply a deep misunderstanding about their limitations and design. Can we please just understand what this system *is* doing and what it is not doing?

@Jirikiha @nazokiyoubinbou @joby @CptSuperlative @emilymbender

If I asked chat GPT to "turn off the porch light" and it said "OK, I've turned off the light on your porch." I would know that it has not really done this. It has no way to access my porch light. I would realize that it is just giving a text answer that fits the context of the previous prompts.

So, why do people think it makes sense to ask chat GPT to explain how it produced a response?

-

@david_chisnall @CptSuperlative @emilymbender

I know people who like and defend LLM have seen this kind of failure. A failure that exposes how the system works through word, phrase and phrase group association. An associative model can't deal with sets, intersections, unions, hard classification, logic.

But, it can write a paragraph that sounds like what a person who has done such thinking might say. It's just all the content is total nonsense.

@futurebird @david_chisnall @CptSuperlative @emilymbender

A another really cool trick is to ask the LLM for copies of papers that you know have never been digitised.

There are a ton of high-citation articles from the 80s that haven't been digitised, so the LLM has seen references to this thing but never the text...

-

@futurebird @CptSuperlative @emilymbender Summarisation is the one I’d be most nervous about because creating a summary is hard: it requires understanding the content and knowing which parts are relevant in a given context, which is why LLMs tend to be awful at it. They don’t understand the content, which is how you get news summaries that get the subject and object the wrong way around in a murder. They don’t know what is important, which is how you get email summaries that contain a scam message and strip all of the markers that would make it obvious that the message is a scam.

If you’re going to read all of them and are just picking an order, that’s probably fine. The worst that a bad summary can do is make you read them in the wrong order and that’s not really a problem.

@david_chisnall @futurebird @CptSuperlative @emilymbender btw, we are working on "edge AI" in a research project and have taken to reframe "summarization" of the source information into "wayfinding" of the information.

Offering fidelity estimations and affordances to navigate the source text from the condensed version should (we hope) inform the mental model of users that they are using a stochastic machine that is merely there to help work with large texts. Still early working hypothesis.

-

@david_chisnall @CptSuperlative @emilymbender

It can summarize scientific papers well in part because they have a clear style and even come with an abstract.

The words and phrases in the abstract of a paper reliably predict the content and main ideas of the paper.

Moreover, even if you remove the abstracts, it has lots of training data of papers with abstracts.

@futurebird @david_chisnall @CptSuperlative @emilymbender

But the abstract is already a summary of the paper you can scan to tell if the paper will be useful to you, and you can (usually) trust that summary to be accurate to the content of the paper and concise enough to include the most relevant points. You can't assume the same of an LLM summary, so it's worse than an abstract search.

I can see the advantage of a syntax- and context-aware abstract search if LLMs were that, but they aren't.