I find it interesting that there's loads of people who made a core part of their identity campaigning against trans women being in women's spaces and how it impacts women, who have gone completely silent about Grok being used to undress and brutalise w...

-

I asked Cisco why it is directly funding an AI tool being used to non-consensually undress and brutalise women and children, having this week invested in xAI. They replied "No comment."

I have a list of other cybersecurity providers invested in xAI; I am working my way through those and plan to feature the key staff members involved in a write-up.

Cisco has an inflated view of their own worth.

-

Before we get to the staff members at cyber companies, the Financial Times has the staff at X and xAI. https://www.ft.com/content/ad94db4c-95a0-4c65-bd8d-3b43e1251091

@GossiTheDog my favorite part is the photo of Elon at the end without the need for added clowniness

-

I asked Cisco why it is directly funding an AI tool being used to non-consensually undress and brutalise women and children, having this week invested in xAI. They replied "No comment."

I have a list of other cybersecurity providers invested in xAI; I am working my way through those and plan to feature the key staff members involved in a write-up.

@GossiTheDog We should be posting naked images of all the tech bros involved. See how they enjoy it.

-

@futurebird @GossiTheDog Limiting Grok to paid users (here in the UK) probably won't save Grok in the UK, though. Possession of CSAM is a strict liability offense, as is distributing it. Doesn't matter whether it's a paid account or free, it's child pornography (usually a max 3 year prison sentence, but if aggravated can pull 10 years). Generating it is ALSO a criminal offense.

@cstross @futurebird @GossiTheDog

Grok's weights contain CSAM, and it's impossible to identify where in the weights the CSAM is stored.

So obviously the best answer is delete grok.

-

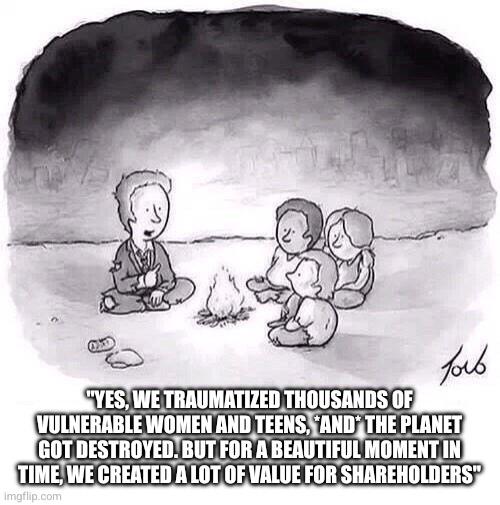

Some Grok users, mostly men, began to demand to see bruising on the bodies of the women, and for blood to be added to the images. Requests to show women tied up and gagged were instantly granted.

‘Add blood, forced smile’: how Grok’s nudification tool went viral

The ‘put her in a bikini’ trend rapidly evolved into hundreds of thousands of requests to strip clothes from photos of women, horrifying those targeted

the Guardian (www.theguardian.com)

@GossiTheDog Virtual abuse should be classified the same as real abuse. People who enjoy this type of content are sick.

-

Before we get to the staff members at cyber companies, the Financial Times has the staff at X and xAI. https://www.ft.com/content/ad94db4c-95a0-4c65-bd8d-3b43e1251091

@GossiTheDog the last one "who doesn't need an artificial clown face" is a killer.

Let's see if FT has the balls to keep this up.

-

Wired has a look at videos created on Grok. Includes knives being inserted into vaginas, “very young” people having sex. Around 10% are CSAM and still online. xAI declined to comment.

Grok Is Generating Sexual Content Far More Graphic Than What's on X

A WIRED review of outputs hosted on Grok’s official website shows it’s being used to create violent sexual images and videos, as well as content that includes apparent minors.

WIRED (www.wired.com)

@GossiTheDog This is so utterly disgusting. Given all the hypocritical censorship US media used to apply to nudity, this shows exactly how, in the Mafia-ruled Washington oligarchy, protégés are obviously free to do whatever they want.

-

I find it interesting that there's loads of people who made a core part of their identity campaigning against trans women being in women's spaces and how it impacts women, who have gone completely silent about Grok being used to undress and brutalise women.

@GossiTheDog awesome!

-

Wired has a look at videos created on Grok. Includes knives being inserted into vaginas, “very young” people having sex. Around 10% are CSAM and still online. xAI declined to comment.

Grok Is Generating Sexual Content Far More Graphic Than What's on X

A WIRED review of outputs hosted on Grok’s official website shows it’s being used to create violent sexual images and videos, as well as content that includes apparent minors.

WIRED (www.wired.com)

@GossiTheDog #Twitter #Xitter, so twits still tweeting are [emoji]s.

-

Before we get to the staff members at cyber companies, the Financial Times has the staff at X and xAI. https://www.ft.com/content/ad94db4c-95a0-4c65-bd8d-3b43e1251091

@GossiTheDog The archived page to see the whole gallery: https://archive.ph/2026.01.08-103510/https://www.ft.com/content/ad94db4c-95a0-4c65-bd8d-3b43e1251091

-

The UK PM has asked the communications regulator for options around banning Twitter from operating in the UK, due to their refusal to deal with non-consensual undressing of women and girls. https://www.telegraph.co.uk/business/2026/01/08/musks-x-could-be-banned-in-britain-over-ai-chatbot-row/

@GossiTheDog Imagining X being banned in the UK and thinking about that scene in the Simpsons when Lionel Hutz imagines a world without lawyers like him and pictures humans of all races and creeds dancing hand in hand in a beautiful landscape. He shudders at the thought.

-

I find it interesting that there's loads of people who made a core part of their identity campaigning against trans women being in women's spaces and how it impacts women, who have gone completely silent about Grok being used to undress and brutalise women.

Yup. It never was about protecting cis women and girls; it was all about hating trans women and girls.

Hate is all they have. Pathetic really.

-

@GossiTheDog@cyberplace.social It's fascinating that payment processors and app stores happily bullied Tumblr over _female presenting nipples_ and have kicked adult game creators off of Steam, but have been completely silent on CSAM and misogyny generated on X

-

So now that Grok is keeping deepfakes behind a paywall, when are they going after Gemini and ChatGPT, which, when presented with the right prompt, will gladly undress an uploaded photo, except for free?

The international laws against deepfakes will have to be made first and force any AI to stop doing it.

Because right now, Gen AI can also be run locally, easily and without any restriction.

You can't win this with just hating on Elon Musk. There's so much more to this.

You can start with the loudest, dumbest, least self-aware, most unearnedly-confident criminal, and go after him in the jurisdiction where it is easiest to get him, and proceed from there.

You can't do everything at once, and doing one thing at a time isn't doing nothing. In fact, trying to do everything is closer to doing nothing than doing one thing.

-

Related.

@GossiTheDog I’ve just sent that email to our external communications team; the reply will be “interesting”.

-

I find it interesting that there's loads of people who made a core part of their identity campaigning against trans women being in women's spaces and how it impacts women, who have gone completely silent about Grok being used to undress and brutalise women.

@GossiTheDog Interesting that the far right, who claim they want to "protect women and children", are silent too.

-

@GossiTheDog@cyberplace.social It's fascinating that payment processors and app stores happily bullied Tumblr over _female presenting nipples_ and have kicked adult game creators off of Steam, but have been completely silent on CSAM and misogyny generated on X

-

@Steve @GossiTheDog what payment processors does X use? Seems like something they would need to be pushed on.

@ikuturso @Steve @GossiTheDog Sex workers are heavily censored by Visa and MC. Not just visuals, but speech. There are entire lists of words and subjects that a phone sex operator cannot talk about, or they won't have payment processing anymore (and I've worked for companies that got shut down over it). But men undressing and brutalizing children is fine.

Phone sex ops in 2008 (not sure if it has changed) couldn't talk about witchcraft as pretend, because what if she was really using it?

-

@cstross @futurebird @GossiTheDog How is the LLC giving these people a fig leaf for overtly criminal, morally reprehensible actions?

@Seruko @cstross @futurebird @GossiTheDog It isn't. Call me cynical, but the only reason X is still standing is

1) CSAM is not its primary purpose

2) It's large and has lots of money

3) Well-known figures are on it

If it was a small server hosted in the UK it would already have been taken down.

It doesn't provide a fig leaf, it's just that the law has not (yet) caught up with end users using it to generate illegal content.

I'd also note that if CSAM can be located the first action is typically to seize all the computers.

Expect:

Phase 1 - if X doesn't fix this, it'll be added to the Great British Firewall, which currently blocks CSAM and a few proscribed groups. I don't see the government backing down.

Phase 2 - police investigation. Anyone who can be identified, *especially* those stupid enough to try creating unwanted images of MPs, will be prosecuted.

Phase 3 - shine a light on people using VPNs to get around geoblocking or age verification for entirely legitimate content. Great... :(